What we get wrong about Artificial Intelligence

The challenge is human-computer interaction design, not technology

These are not the AI challenges you’re looking for

Artificial intelligence (AI) is one of the most hyped terms of the 21st century, and yet one of the most misunderstood.

Very often, when talking about AI, we like to automatically couple it with other terms such as Machine Learning, Deep Learning, and Neural Networks. This makes it sound like over 90% of AI is this kind of statistical algorithm that only PhDs can understand.

This is where we are dead wrong about AI.

After many years of building data and AI products as a data scientist and entrepreneur, I realized that the primary challenge of building AI solutions lies not in building an efficient system, but rather in human-centered design.

While automated learning and classification algorithms are vital to the development of an artificially intelligent system, they only serve as enablers of true intelligence. These algorithms are necessary to develop AI, but not sufficient.

What this means is that the improvement of machine learning algorithms over the years has made it possible for machines to perceive the world almost like humans do. However, in order for a machine to think and act like a human, we must look elsewhere for the answer.

Over the thousands of years of evolution, humans developed unique ways of interpreting and thinking about the world. In order for AI to have a significant impact on our society, it must understand not only how to act like a human, but also how to think like us.

Unfortunately, while the information revolution has enabled us to collect petabytes of data on how we act in a certain situation, not much data has been collected on how we think. This makes it impossible to properly train an AI system.

That’s why we must shift our focus from technology to interaction design. We need to start working on large-scale interaction systems that enable machines to rapidly communicate and collaborate with humans. Machines need to start learning how we conceptualize the world.

What this means for AI researchers and companies is that the true future of AI lies in design, in an AI’s ability to interact with and learn from humans, and in understanding human contexts, not in more powerful CPUs and algorithms.

This also means technical prowess will become less and less important in building a great AI, relative to deep empathy toward the needs and challenges of the end users who will be interacting with these AI systems.

In this article, I will take an in-depth dive into modern AI technology systems, and demonstrate where AIs today fall short compared to human intelligence.

Then, I will discuss why learning by interaction has been one of the best ways, if not the only way, for both AIs and humans alike to learn about our world.

Finally, I will offer some suggestions on how we can create these interaction systems that will ultimately enable true artificial intelligence.

Current AI closely mimics the human thinking process, but falls short in learning on its own

Most of the AI systems on the market mimic the human information processing model in psychology. Therefore, it is vital to start our discussion with how an intelligent system like our brain processes information (you can read more about information processing here).

In general, an intelligent system processes information in three very distinctive stages: reception, interpretation, and learning.

Reception is the process in which receptors (e.g. the eyes or ears of the human body) receive signals from the environment and send those signals to a processing agent (i.e. the brain) in a format that the processing system can interpret (e.g. electrical nerve impulses).

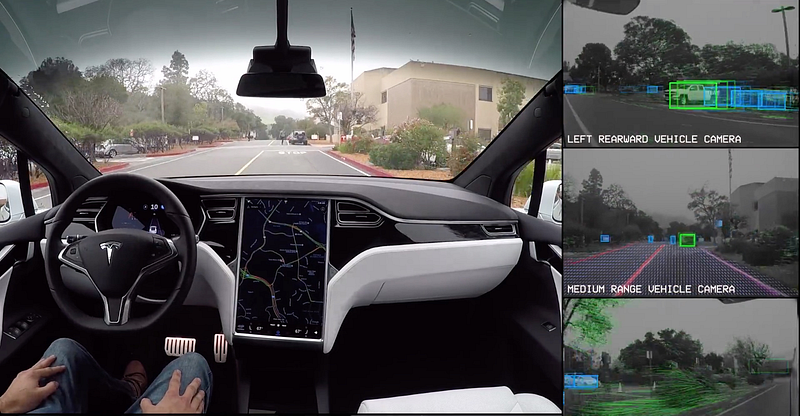

In AI, examples of these receptors include the cameras on Tesla’s self-driving cars, the Amazon Echo for Alexa, or the iPhone for Siri.

Then comes the interpretation process, in which the processing agent (i.e. the brain) performs three operations on the data sent by the receptors:

First, it identifies several objects of relevance from the data (i.e. recognizing that there is a red round shape).

Then, it goes into a library of references (i.e. the human memory), searches for references that will help it identify the objects, and then identifies them (i.e. recognizing that the shape is an apple).

Finally, based on the current state of the entire system (e.g. how hungry you are), the processing agent (i.e. the brain) determines the importance of each piece of information it receives, and presents to the user only the information that passes a certain threshold (in humans, this is called attention). That’s why when you are hungry, you are more likely to notice apples and other food than other objects.
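The three interpretation steps above can be sketched in a few lines of Python. This is only a toy illustration, not how any production system works: the `Percept` type, the `REFERENCES` library, the `FOOD` set, and the salience weights are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    # Step 1 output: features already picked out of the raw signal.
    features: frozenset


# Hypothetical library of references (the "memory").
REFERENCES = {
    frozenset({"red", "round"}): "apple",
    frozenset({"yellow", "long"}): "banana",
    frozenset({"grey", "flat"}): "pavement",
}

FOOD = {"apple", "banana"}


def identify(percept):
    """Step 2: look the features up in the library; None if unknown."""
    return REFERENCES.get(percept.features)


def salience(label, hunger):
    """Step 3: score importance from the system state (hunger in [0, 1])."""
    base = 0.3  # assumed baseline importance for any recognized object
    return base + 0.6 * hunger if label in FOOD else base


def interpret(percepts, hunger, threshold=0.5):
    """Return only the recognized objects that pass the attention threshold."""
    attended = []
    for percept in percepts:
        label = identify(percept)
        if label is not None and salience(label, hunger) >= threshold:
            attended.append(label)
    return attended
```

With these assumed weights, a hungry system attends to the apple and ignores the pavement, while a full one attends to neither. An object that is not in the library is silently dropped, which is the gap that learning has to fill.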

In AI, interpretation usually happens in a large information processing system on the cloud, using sophisticated machine learning algorithms such as neural networks.

With recent developments in machine learning and game-playing algorithms (especially in deep neural networks), AI systems can identify objects based on a body of reference exceptionally well, enabling amazing innovations such as self-driving cars.

However, we cannot stop here, since the library of references used by the processing agent is limited, especially at the beginning of its life cycle (a baby might not know what an apple even is).

That’s why learning needs to occur continuously, expanding this library of references so that the system can reach its full potential.

For modern AI systems, this is where the real challenge lies.

As the current technology stands, AIs are really good at classifying a situation into categories and optimizing based on the parameters provided. However, they cannot create these categories or parameters from scratch without help from human developers.

This is because AI “sees” the world as multiple, purely mathematical matrices, and does not have the intrinsic ability to empathize with human experiences unless we teach it to.
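To make this limitation concrete, here is a minimal sketch of a fixed-label classifier. The two-dimensional feature vectors and the `CENTROIDS` table are made up for illustration; the point is only that such a model must answer with one of the categories its developers gave it.

```python
import math

# Hypothetical categories supplied by a human developer,
# each represented by a 2-D feature vector.
CENTROIDS = {
    "apple": (0.9, 0.1),
    "banana": (0.1, 0.9),
}


def classify(features):
    """Return the known category whose centroid is closest to the input."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))
```

Calling `classify((0.8, 0.3))` returns `"apple"`, as expected. Calling `classify((0.0, 0.6))` on an object that is neither fruit still returns `"banana"`: the classifier has no way to say "this is something new", let alone to ask what it is.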

Furthermore, during the training of these classification models, AIs are only given the outcomes of each specific situation, instead of the entire thinking process and rationale that led to that specific outcome, which makes comprehension impossible.

For example, an AI system might be programmed to recognize the image of a baby, but it will not understand why recognizing the image of a baby is needed in the first place, since that information was never given to the AI by the engineers who created it.

In a way, an AI is like a super intelligent newborn baby — while you can show it all the knowledge in the world, it cannot understand how the world really works unless the AI actually gets out into the world to learn from experience.

Because AI lacks the ability to create its own contexts, most of the commands we give Siri and Alexa are actually manually programmed by the engineers at Apple and Amazon. It’s also why Amazon puts so much effort into creating an open ecosystem around Alexa, to encourage companies to program skills on its Alexa platform.

Because they are so human-dependent, the current AI systems such as Alexa cannot really develop new context and learn like humans. Therefore, it is really not accurate to call them “artificial intelligence”.

Interaction can solve the learning problem in AI

So how can we create an AI system that can empathize with the human world?

The answer is rather simple — we teach it to interact with humans and ask questions.

As I mentioned earlier, the biggest challenge in training AI for human interaction at the moment is the lack of detailed, interaction-level data about the human thought process.

In order to collect this level of data, we need to expand AI’s role from a data consumption agent (using data generated elsewhere) to a data collection agent as well (generating its own datasets).

What does this mean? It means we need to design AIs such that they can interact with humans to understand not only what the human wants, but why they want it. Just like an apprentice to a master craftsman, AIs need to learn on the job.
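As a rough sketch of what "learning on the job" could look like, the toy agent below asks a person two questions whenever it meets something it does not recognize: what it is, and why it matters. The `InteractiveAgent` class and its `ask` callback are hypothetical names for this example; a real system would need a far richer dialogue than this.

```python
class InteractiveAgent:
    """Toy agent that collects its own labels and rationales by asking."""

    def __init__(self, ask):
        # `ask` is any callable that takes a question string and returns
        # the human's answer (assumed interface for this sketch).
        self.ask = ask
        self.library = {}  # features -> (label, rationale)

    def handle(self, features):
        key = frozenset(features)
        if key not in self.library:
            # Unknown object: collect both the "what" and the "why".
            label = self.ask(f"I see {sorted(key)}. What is this?")
            why = self.ask(f"Why does recognizing '{label}' matter to you?")
            self.library[key] = (label, why)
        return self.library[key]
```

The first time the agent sees a set of features it interrupts the user; the second time it answers from its own library, which now stores the reason alongside the label. That stored rationale is exactly the kind of interaction-level data that outcome-only training sets leave out.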

In order for AI to have this level of interaction with humans, we must first shift our perception of AI.

Right now, we perceive AI as this omnipotent black box that can solve all the problems automatically without any requirement of human input.

Most of us think that we can give AI a general command such as “Alexa, run my business for me,” and expect Alexa to run our businesses for us as we stay in bed and watch Netflix.

However, this is much too high a standard for artificial intelligence, even for intelligence in general.

Let’s use consulting as an example. When human consultants interact with clients, they never pull the client aside and say, “Hey, we understand your business needs perfectly. We can do everything to improve your business for you; you just need to sit and watch.”

Instead, they spend hours and hours sitting down with the clients, asking carefully crafted questions to better understand the needs of their clients, and ultimately work with the client to create a solution that is tailored to the needs of the client.

The success of a consulting project really hinges on a consultant’s ability to draw out the needs of the client and to deliver the most value for their client based on their constraints.

If this is the standard that we hold ourselves to when interacting with one of the more intelligent segments of human society, we should not expect AI to automatically understand all our needs and provide the perfect solution without interacting with us.

How can we create interactive AIs?

In order to give AIs the ability to ask intelligent questions the way consultants do, we must place a lot less emphasis on creating the most powerful machine learning algorithm. Rather, we ought to focus on designing systems that enable maximum interaction between AI and human users while achieving the task the AI is designed for.

More practically, it means that AI product managers should focus less on hiring engineers that are good at algorithm design, and more on recruiting human-centered designers who can talk with the end users of the AI and facilitate interaction between the users and the AI. The task of these designers is to identify the best ways for AI to work with users to improve both the AI’s own intelligence, and the lives of human users.

Essentially, creating interactive AIs demands that AI product managers (like myself) build AIs with the goal of understanding and serving people, instead of replacing people.

At the same time, the development of AI must also be much more transparent than it is right now. As design firm IDEO points out in “A Message to Companies That Collect Our Data: Don’t Forget About Us,” businesses today must design for transparency and user control if they want to build trust with customers.

At the moment, many AI companies refuse to show their users what is actually happening under the hood. This is not because they fear competitors stealing their technology secrets (honestly, there is not that much to steal in the first place). Rather, they often fear that if their users know how manual the AI is, users will lose trust in the company.

While this fear is valid, to achieve the highest level of intelligence possible for AI technologies, users must be intensively involved in the design iteration process. Therefore, while transparency might harm the early adoption of an AI product in the short term, the long-term benefit of more transparency to the entire AI system is limitless.

Final Thoughts

The fact is plain and simple: the AI revolution is inevitable. Like it or not, AI will play a large role in our workforce in the next few decades.

However, to make AIs truly useful for our society, they need to understand not only what we humans do, but also why we do it, and this learning requires AIs to jump out of the black box and interact with their users.

This means that for years to come, AI will be less of a technology and coding problem. AI will be more of a design problem in which human-centered designers who empathize with end users will play a critical role.

Ultimately, I can see a future world in which AIs and humans exist in harmony, with each party playing its unique role in human society. Only then will the AI revolution result in prosperity for humanity.

AI-Human Harmony.